“One general law, leading to the advancement of all organic beings, namely, multiply, vary, let the strongest live and the weakest die.”

– Charles Darwin, On the Origin of Species

Note: I originally started writing this article all the way back in 2016, got distracted and left it on the shelf. The current furore about ChatGPT, Midjourney, Dall-E, and all the other generative AIs has inspired me to dust it off, update it and ship it.

Artificial Intelligence is a curious thing. At once we are bombarded with evidence of stunning new applications of machine learning, warned of the existential threat that it might pose to humanity, but told that artificial ‘general’ intelligence — the type of self-awareness that clever monkeys like us possess — is only a distant possibility.

While I don’t disagree with any of those views, I’d like to paint a different picture — that the evolution of a non-organic intelligence which surpasses human abilities is not only likely, but inevitable.

“From so simple a beginning endless forms most beautiful and most wonderful have been, and are being, evolved.”

– Charles Darwin, On the Origin of Species

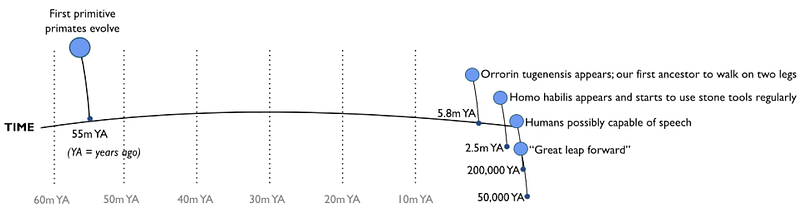

55 million years ago, the first primates evolved. A yawning fifty million years later Orrorin tugenensis, a chimp-sized, tree-dwelling primate and possibly our first bipedal ancestor, trotted onto the scene.

Generation by generation, hominids evolved and our brains developed. Over seven million years the human brain has tripled in size, with most of this growth occurring in the past two million years, roughly the final third of our evolution.

2.5 million years ago we started to use simple stone tools; Homo habilis, one of the first of our ancestors to share our ‘Homo’ genus, appeared two million years ago, proudly showing off a modest increase in brain size.

It’s in the last half-million years that a period of diversity in the Homo genus occurred, with many ‘first’ and ‘last’ appearances of our evolutionary cousins. Around 250,000 years ago, Homo sapiens (the so-called ‘wise man’) turned up and immediately started a bar fight with Homo neanderthalensis which dragged on until around 30,000 years ago. We emerged victorious, despite the greater physical strength and larger brains of our Neanderthal cousins.

Around sixty thousand years ago the number of humans dwindled almost to the point of extinction. Whether caused by disease, the bone-chilling temperatures of an ice age, or an erupting super-volcano, it’s possible that a mere two thousand individuals remained — or, one thousand mating pairs, to use language normally reserved for endangered species.

This near-catastrophe was followed by a phase of human evolution kicking into high gear, in an event termed “the great leap forward”. On the back of the development of social interaction, language and trust, we evolved faster and faster. Human tribes increased in size, we travelled more and developed art and culture. It’s theorised that this rapid development of social skills made Homo sapiens better able than Homo neanderthalensis to cope with the inclement weather; our capacity for better parties and cave paintings of mammoth stew helped us outcompete and outlive our otherwise superior relatives.

The evolution hack

“We are machines built by DNA whose purpose is to make more copies of the same DNA. … This is exactly what we are for. We are machines for propagating DNA, and the propagation of DNA is a self-sustaining process. It is every living object’s sole reason for living.”

– Richard Dawkins, The Blind Watchmaker (1986)

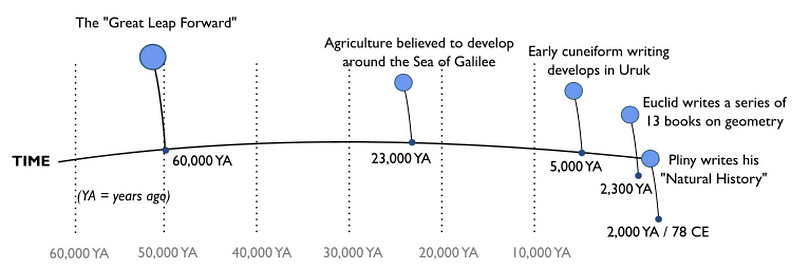

With the rapid development of our capacity for social interaction we stopped being bound by the sub-glacial pace of biological inheritance. Instead, our information came to be passed by society and culture rather than genetics.

Humans had learned to hack the biology game. While most other species were stuck in the slow lane of genetic inheritance, humans started to communicate our improvements between generations with something faster — at first with group norms, then with culture, and eventually with religion, law and education.

Nature herself is adapted, through billions of evolutionary trials and errors, to survive. Survival is made more likely with a great number of competing players, each testing and occasionally improving on the genetic signal that they have been passed in their genome. Each generation modifies, mutates and, very occasionally, improves the blueprint passed to the next.

Culture, language and society allowed humans an alternative to genetics and epigenetics. We could suddenly pass a different signal through language, writing, history and science. Each generation started to build on this much more complex signal, and with it the pace of our evolution increased.

First social groups, then language, then agriculture. Then beer, writing, cities, empires and world-spanning religions, all in less than ten thousand years.

In 1620, Francis Bacon proposed a ‘new method’ in his Novum Organum. In the blink of an eye, Newton, Darwin, Popper and Einstein had built upon the legacy of scientists and educators before them.

Our signal, this thread of continuing improvement by experimentation, was encoded in illuminated volumes, then printed books. The postal system, the telephone, radio and television all combined to increase the speed at which we learn and share knowledge, and increase the base upon which we build.

By Darwin’s time we were no longer passing knowledge by word of mouth, but comparing scientific theories with — and sometimes stealing them from — colleagues around the world.

The evolution of the network

“The web is more a social creation than a technical one. I designed it for a social effect — to help people work together — and not as a technical toy. The ultimate goal of the Web is to support and improve our weblike existence in the world.”

– Tim Berners-Lee

The crowning glory of humanity’s innate desire to share our signal is the Internet, a system by which both humans and machines can communicate. On top of this technological marvel sits Tim Berners-Lee’s wonder, the World Wide Web.

In 1969, the first message was sent on the ARPANET, the mother of today’s internet. In only fifty years, we’ve created a global network of information sharing in our own image. The internet reflects humanity’s mores; some information is good and some unbelievably awful.

The rocket-fuelled development of technology

In creating the network, we have simultaneously inspired the creation of computers with ever more capability to compute, and robots that can interact directly with a human environment.

The internet has been both recipient and driver of technology, demanding more processing, more storage and faster connectivity. The advent of cloud computing delivered the capability to process massive amounts of data into the hands of even more companies, entrepreneurs, academics and criminals. Suddenly, in only the last few years, machine learning has become democratised, and fashionable cloud providers like Google Cloud Platform, Microsoft Azure and Amazon Web Services groan under the weight of pre-packaged machine learning and artificial intelligence products. Natural language processing and visual recognition are now just an API call away for anyone with a credit card.

Advances in machine learning have driven advances in machine perception — in visual processing, spatial awareness and haptic feedback. These advances have led to robots that can open doors, and drones that can fly around obstacles with only vision to guide them.

We’re in the epoch of self-driving cars and robot pizza, and each advance compresses the time between discoveries. Not only is technology driving invention forwards, it’s also driving the speed of invention forwards, and with it our capability to pass a signal to future generations. These are the same tightening feedback loops that human evolution saw sixty thousand years ago.

AI is now hacking the evolution gene

“It is raining DNA outside. On the bank of the Oxford canal at the bottom of my garden is a large willow tree, and it is pumping downy seeds into the air. … [spreading] DNA whose coded characters spell out specific instructions for building willow trees that will shed a new generation of downy seeds. … It is raining instructions out there; it’s raining programs; it’s raining tree-growing, fluff-spreading, algorithms. That is not a metaphor, it is the plain truth. It couldn’t be any plainer if it were raining floppy discs.”

– Richard Dawkins, The Blind Watchmaker (1986)

If speed of change is one measure of adaptability, computers have already proven to be more capable of evolution than humans.

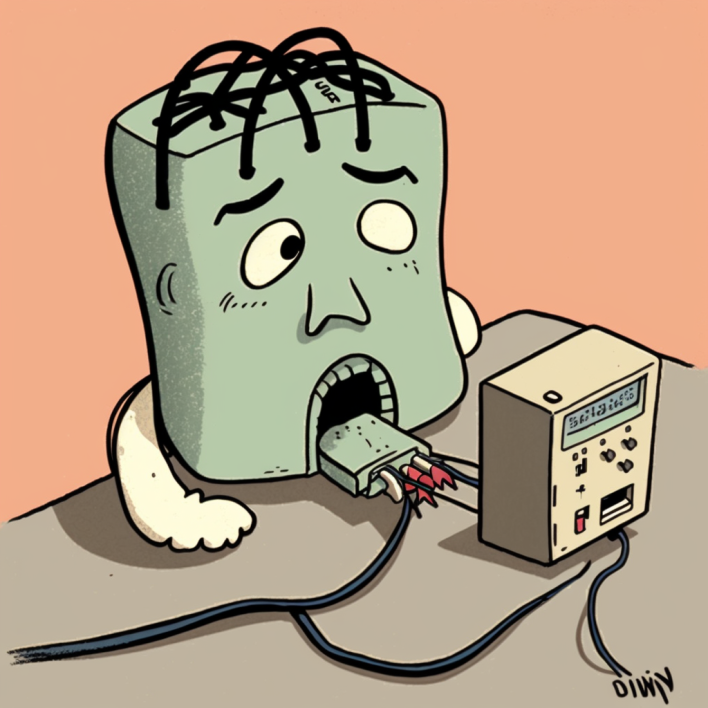

As I write this, not a day goes past without a mention of ChatGPT in the news. OpenAI’s Large Language Model (LLM) became the fastest-growing app on the internet in January 2023, allegedly reaching 100 million unique visitors within two months of launch. ChatGPT is breathtakingly impressive and capable of seemingly human-like creativity.

It is important to remember that ChatGPT, flawed as it is and for all its success and newsworthiness, is just one of many LLMs being worked on by tech companies. It is highly likely that other businesses, including Google and China’s Huawei, have equivalently powerful LLMs but are being more circumspect about releasing them to the general public.

Large Language Models and other generative AIs, like Dall-E and Midjourney, are possible only because of the enormous datasets of text and images that exist on the internet with which to train them (regardless of the legality of their use). Current machine learning models are voracious: ChatGPT’s training set (the data used to create the model) is many hundreds of billions of words. Creating these kinds of novel machine learning models relies, at the moment, directly on the fact that people are writing Wikipedia articles, tweeting abuse on Twitter, submitting photos to Instagram and, at some point, posting their videos to TikTok.

Our current advances are possible only because of massive increases in processing power and the open, public, standards-based sharing of knowledge and data via the Internet. It is thanks to our explosion of interconnectedness that code surpasses culture as a means to pass on and refine our signal.

What we are calling Artificial Intelligence is the ultimate expression of mankind’s history of outwitting biological evolution. We now understand that the development of human brains was not due to tool use, but rather the development of our social networks and collaboration. In the same way it is not just the existence of more powerful processors, but the connections between our information systems that are allowing machine learning to advance at such an incredible pace. Like it or not, we are going to continue to see the feedback loops tighten, with artificial intelligence and synthetic creativity improving ever faster. The next ChatGPT-style phenomenon is now probably only months away.

I, Robot

“you just can’t differentiate between a robot and the very best of humans.”

― Isaac Asimov

I don’t intend to make the case that artificial intelligence is currently anywhere close to possessing the qualities of what is called artificial general intelligence (AGI). The ‘Singularity’, a philosophical concept illustrating the point at which AI becomes broadly comparable to (or indistinguishable from) human cognition, is likely decades away, if we can keep ourselves and our planet alive that long.

However, the ability of software to evolve and adapt so much more quickly than genetics or culture, and the rate of technological improvements in hardware, storage and networking (for the moment, leaving aside the mind-boggling promise of quantum computing), make some form of artificial general intelligence inevitable.

Once reached, this artificial intelligence is nearly certain to surpass our own. Even if we believe that our own intellectual dominance extends far beyond what is currently possible with AI, it’s hard to pretend that there isn’t a point where the plotted lines of cognitive capability cross.

Humans will be displaced from our role as the intellectual apex predator simply because code can adapt faster than flesh. Where we are limited by our biology, synthetic organisms can be designed with single-minded purpose and then, if necessary, have their very selves transmitted to a new, fitter and faster host. Unconstrained by biology, the learning potential of these systems is nearly boundless.

So what are you really saying?

The development of social interaction, collaboration and culture over the last 60,000 years has allowed humans to hack the system of biological inheritance. We’re no longer simply passing on our genetic information, but rather digitising, storing and sharing the entire corpus of human knowledge through a near-instantaneous worldwide network. That information isn’t just accessible to humans, but also to machines, which we can train to incorporate those millennia of learning.

The heart of this matter is the speed of inexorable change. With advancements in technology, artificial intelligence is nearly certain to surpass human cognition; it is a matter not of ‘if’ but of ‘when’. The question is: what are you doing to prepare?

A footnote

The implications of this change are worrying and thrilling in equal measure. Should we manage to preserve the planet for long enough to power the machines to reach the point of equilibrium, how will we share our space with our new neighbours?

Given the rather poor job that humans have done protecting our uniquely cosseting planet, I’m not overly concerned with the possibility that humans will lose our dominant place on Earth. My hope is that AI will surpass the best of humanity, and be responsible for finding a way for all of Gaia’s creations to continue to enjoy our beautiful pinprick in the dark velvet of the universe.

Evolution is not cruel or kind, but single-minded in its mission to create survivors. The myriad survivors of nature are beautiful and wonderful, and my hope is that non-organic life will be too.

As Deckard put it,

“Replicants are like any other machine. They’re either a benefit or a hazard. If they’re a benefit, it’s not my problem.”

– Deckard, Ridley Scott’s Blade Runner

— Mark