How Generative AI will impact product engineering teams — Part 2

This is the second part of a six-part series investigating how generative AI productivity tools aimed at developers, like GitHub Copilot, ChatGPT and Amazon CodeWhisperer, might impact the structure of entire product engineering teams. In Part 1, we explored:

- The landscape of product engineering and the possibility that teams will need fewer human engineers with the rise of Generative AI tools.

- The traditional 5:1 Ratio in tech teams: how roughly five engineers for every product manager is common across the industry.

- The roles of product managers and engineers in current product development processes and how these roles might shift with AI advancements.

- How past research has given flawed predictions on which professions will be the least impacted by AI, and how LLMs have upended these predictions, particularly for the tech and creative industries.

The explosion of AI coding tools

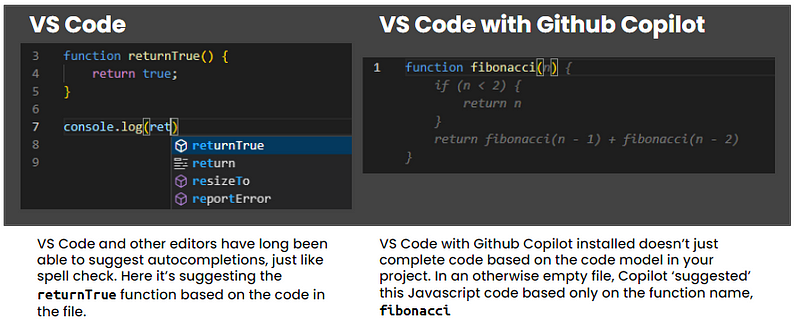

Automation has been a part of software engineering for almost as long as there has been software engineering. Eric Raymond’s landmark 2003 book, The Art of Unix Programming, reflected on 17 design rules for software engineers, including the Rule of Generation: “Avoid hand-hacking; write programs to write programs when you can”. Raymond’s advice is still relevant 20 years after it was published:

“Human beings are notoriously bad at sweating the details. Accordingly, any kind of hand-hacking of programs is a rich source of delays and errors. The simpler and more abstracted your program specification can be, the more likely it is that the human designer will have gotten it right. Generated code (at every level) is almost always cheaper and more reliable than hand-hacked.”

Since Raymond wrote those words we have developed automated test tools, linters (tools which automatically check the code we write), auto-completion for our development environments (like a spell-checker, but for code) and even frameworks (like React and Django) which automate the creation of a large amount of basic, boilerplate code for generic applications like websites and mobile applications. For the most part developers have reveled in automation, while always being quietly confident that our experience, skill and creative uniqueness would make us hard to replace. It didn’t help that McKinsey and their peers were also telling us that we’d be safe from the clutches of AI automation.

The most recent additions to the code automation space are a group of tools that offer to sit alongside developers as an equal, and look eminently capable of progressing far further than we had previously imagined. In the spirit of Raymond’s Rule of Generation, it’s possible that these tools will write complex software better than humans.

Probably the prima donna of developer assistance tools as I write is GitHub Copilot, which unblinkingly markets itself as a pair programmer (the term given to a human coding buddy, who sits alongside you and co-authors your code). Copilot was released to developers in June 2022, and its younger and more charming sibling, Copilot X, was announced in March this year. GitHub has made what appear to be entirely reasonable claims that Copilot increases developer productivity, with their study reporting that the tool reduces the time to complete engineering tasks by up to 55%.

GitHub (don’t forget that this is a company owned by Microsoft, who also own a rumoured 45% stake in ChatGPT makers, OpenAI) isn’t alone in this space. Amazon CodeWhisperer was also announced last year, with similar claims of a 57% increase in developer productivity. Like Copilot, CodeWhisperer is accessible in the engineer’s development environment (IDE) and can correct, comment, explain and write code.

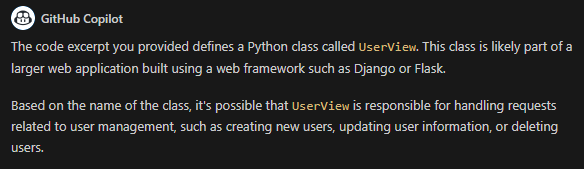

“The secret is that we don’t just have to provide the model with the original file that the GitHub Copilot user is currently editing; instead we look for additional pieces of context inside the IDE that can hint the model towards better completions.”

It’s this wider context that these developer tools can exploit (the IDE, the files available on the developer’s machine, the code repositories in git and even, potentially, the documentation for the application) that makes them so powerful. This broad context lowers the skill level required for developers to ‘prompt engineer’ solutions from the AI. Examples to improve prompts are available to the AI tools directly within the environment they operate in, and also from the billions of lines of pre-existing code they were trained on. This gives the LLM a good chance of being able to create not just an appropriate output, but one that exceeds the capabilities of an average developer.

With Copilot X, the code interface itself is no longer the only palette the AI has to work with, and it’s also no longer restricted to just auto-generating code. A user can highlight code in their development environment, and simply ask for an explanation of what the code is doing.
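To make the idea of wider context more concrete, here is a minimal sketch in Python of how a tool might combine the file being edited with snippets from other open files. This is not how Copilot or CodeWhisperer actually work: the function names, the word-overlap ranking and the character budget are all assumptions made purely for illustration.

```python
import re


def tokens(text: str) -> set[str]:
    """Split text into a set of lowercase identifier-like words."""
    return set(re.findall(r"[A-Za-z_]\w*", text.lower()))


def build_prompt(current_file: str, other_files: dict[str, str], budget: int = 2000) -> str:
    """Hypothetical prompt builder: most similar neighbouring files first,
    then the file being edited, all within a rough character budget."""
    current = tokens(current_file)

    def similarity(item: tuple[str, str]) -> float:
        # Jaccard overlap between the words in the two files (an assumption,
        # chosen only because it is simple to read).
        _name, text = item
        other = tokens(text)
        union = current | other
        return len(current & other) / len(union) if union else 0.0

    ranked = sorted(other_files.items(), key=similarity, reverse=True)

    parts = []
    used = len(current_file)
    for name, text in ranked:
        snippet = f"# Context from {name}\n{text}\n"
        if used + len(snippet) > budget:
            break  # stop once the budget would be exceeded
        parts.append(snippet)
        used += len(snippet)

    # The file being edited always goes last, closest to the completion point.
    parts.append(current_file)
    return "\n".join(parts)
```

The point of the sketch is only that the developer never writes this prompt by hand: the tool gathers the surrounding material on their behalf.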

How will Product Engineering teams use these tools?

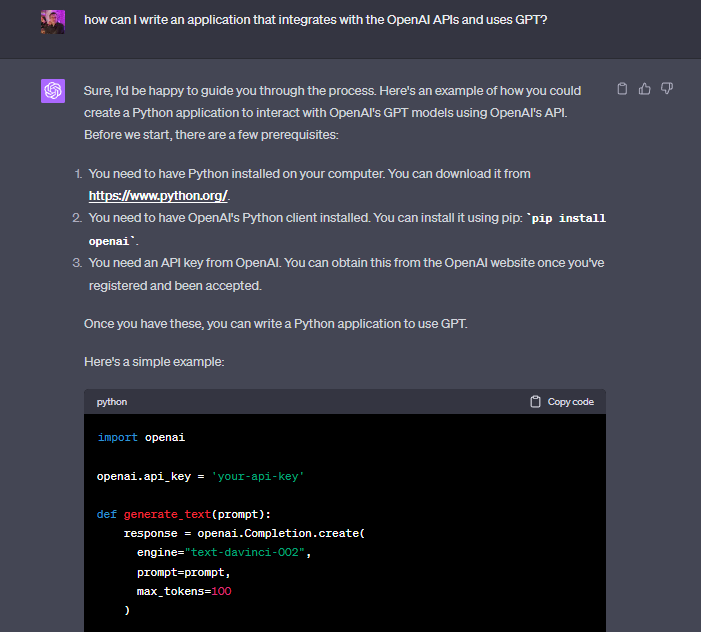

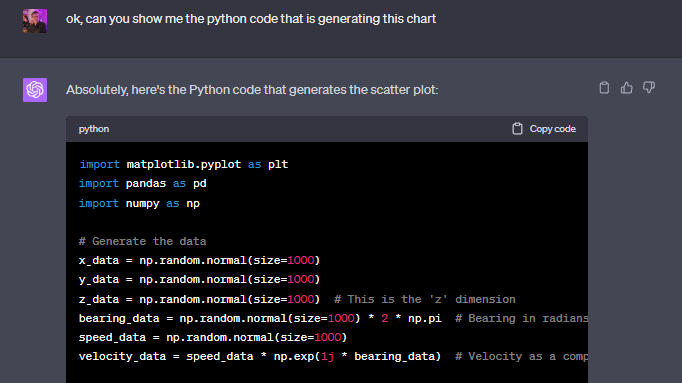

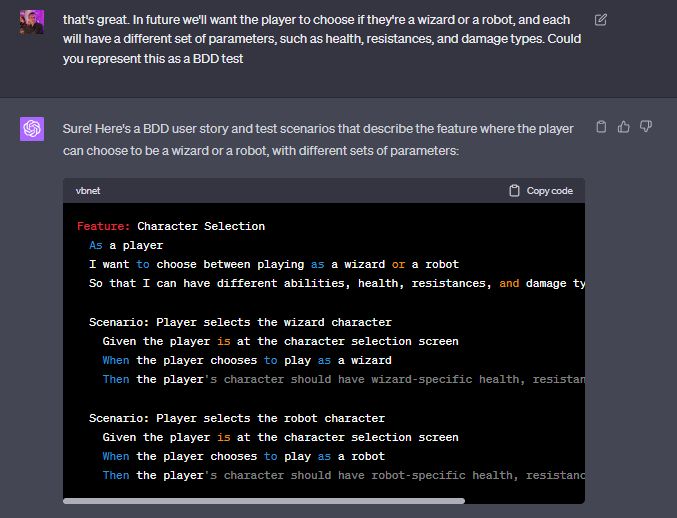

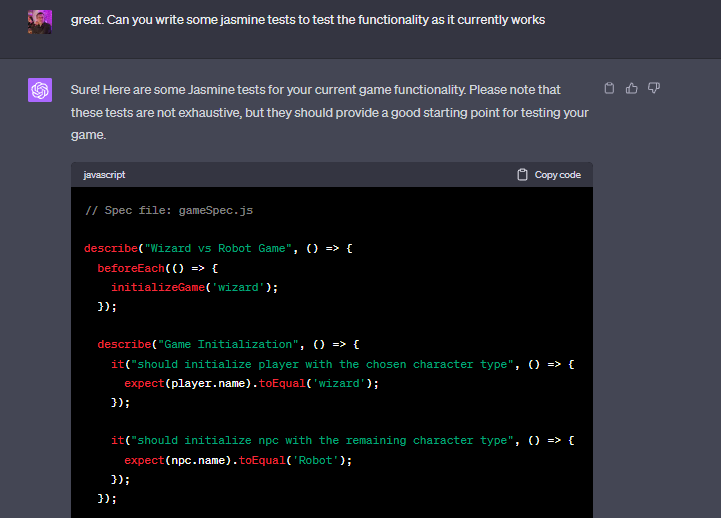

The first time that I spent a serious amount of time generating code with ChatGPT, I was actually asking it to generate some user stories. A friend had asked me for some help writing an application, and with my professional coding days now long behind me, I needed some help. My thought process was reasonably simple: “I can’t code anymore, but I can probably describe what is needed in a way that a developer can understand so we can hire a freelancer to actually write the code”. I explained to ChatGPT that I wanted it to create some behaviour-driven development (BDD) stories. BDD is a reasonably well-adopted approach to development that serves as an intermediary between what the customer (and the product manager) wants, and the code that the engineer will write. It’s a good test of your own understanding of a problem to write a BDD story, so naturally, I just got ChatGPT to do it for me.
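For readers who haven’t met BDD before, a story is usually phrased as “Given / When / Then”, and it maps quite directly onto a test. The sketch below is a made-up example in Python, not one of the stories ChatGPT produced for me: the `Basket` class and the scenario are hypothetical and exist only to show the shape.

```python
from dataclasses import dataclass, field


@dataclass
class Basket:
    """Hypothetical domain object used only for this illustration."""
    items: dict[str, float] = field(default_factory=dict)

    def add(self, name: str, price: float) -> None:
        self.items[name] = price

    def total(self) -> float:
        return sum(self.items.values())


def test_adding_an_item_updates_the_total() -> None:
    # Given an empty basket
    basket = Basket()
    # When the customer adds a book costing 12.50
    basket.add("book", 12.50)
    # Then the basket total is 12.50
    assert basket.total() == 12.50


# Run the scenario directly; a test runner such as pytest would also collect it.
test_adding_an_item_updates_the_total()
```

The three comments are the story a product manager could read and agree with; the lines beneath them are what the engineer (or the AI) has to make true.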

X : What’s the best way of writing a specification for a commodity? Me : Your test suite. X : Eh? Me : Every novel thing starts with a few basic tests, as it evolves it gains more, your product should be built on those test and eventually they should help define the commodity.

Me : Your business, hardware and software should have test driven development baked in throughout wherever possible. How do you change anything in a complicated environment without it. Every single line on a map is a relationship, an interface for which there should be tests …

… those tests define the operation of the interface, they are the specification you hand to another, that you check a product against, that you test a commodity with and then determine the trades you wish to make. Those test should expand and evolve with the thing itself.

Although tests aren’t as exciting to write as application code, and are often seen as cumbersome to maintain, good tests demonstrate the anticipated behaviour of the application. Even more importantly, tests don’t just describe how something should behave, they also demonstrate, repeatedly, that it really does behave that way. The definition of the scope and the details of a really valuable test suite is part of the collaborative work that product and engineering teams do together. Simon’s tweet inspired me to reflect that, perhaps, the future of a prompt-engineered application starts with thoughtful test design.

From this perspective, we can start to envision a future for product engineering teams that embrace Generative AI tools. The job of eliciting customer requirements, understanding potential solutions, analysing the value that will be created by serving the customer and prioritising work will remain just as important as it is today. Product management will no doubt benefit from Generative AI tools, but I suspect the impact for the product discipline will be less shocking than it is for tech teams. Which brings us back to those five engineers.

In Part 3, you can read about:

- How Generative AI tools could potentially upend the longstanding ratio of 5 engineers to 1 product manager.

- How tools like GitHub Copilot and AWS Amplify Studio could reshape product development, shifting the engineer’s focus from hand coding to design, architecture, and integration.

- How Generative AI tools can assist teams who are facing painfully outdated tech, handling complex porting and refactoring effortlessly.

- The possible unifying influence of AI tools on mobile and web app development, reducing duplicated efforts and bridging the skill gaps between web, Android, and iOS development.

- The impact of coding automation on junior developers and engineering progression.

- Part 1: How Generative AI Will Impact Product Engineering Teams

- Part 2: The proliferation of Generative AI Coding Tools and how Product Engineering teams will use them

- Part 3: If engineers start to use AI coding tools, what happens to our product teams?

- Part 4: If AI coding tools reduce the number of engineers we need, where do we spend our budgets?

- Part 5: Who wins and who loses? How different types of business could be impacted by AI tools.

- Part 6: The Best of Both Worlds: Human Developers and AI Collaborators

P.S. If you’re enjoying these articles on teams, check out my Teamcraft podcast, where my co-host Andrew Maclaren and I talk to guests about what makes teams work.